1. Introduction

In the modern computing world, the performance of a computer system largely depends on how efficiently it can access and process data. The Central Processing Unit (CPU) is responsible for executing instructions, but its speed is limited by how fast data and instructions can be supplied to it. To overcome the performance gap between the fast CPU and the relatively slower main memory, computer designers developed a concept known as Memory Hierarchy.

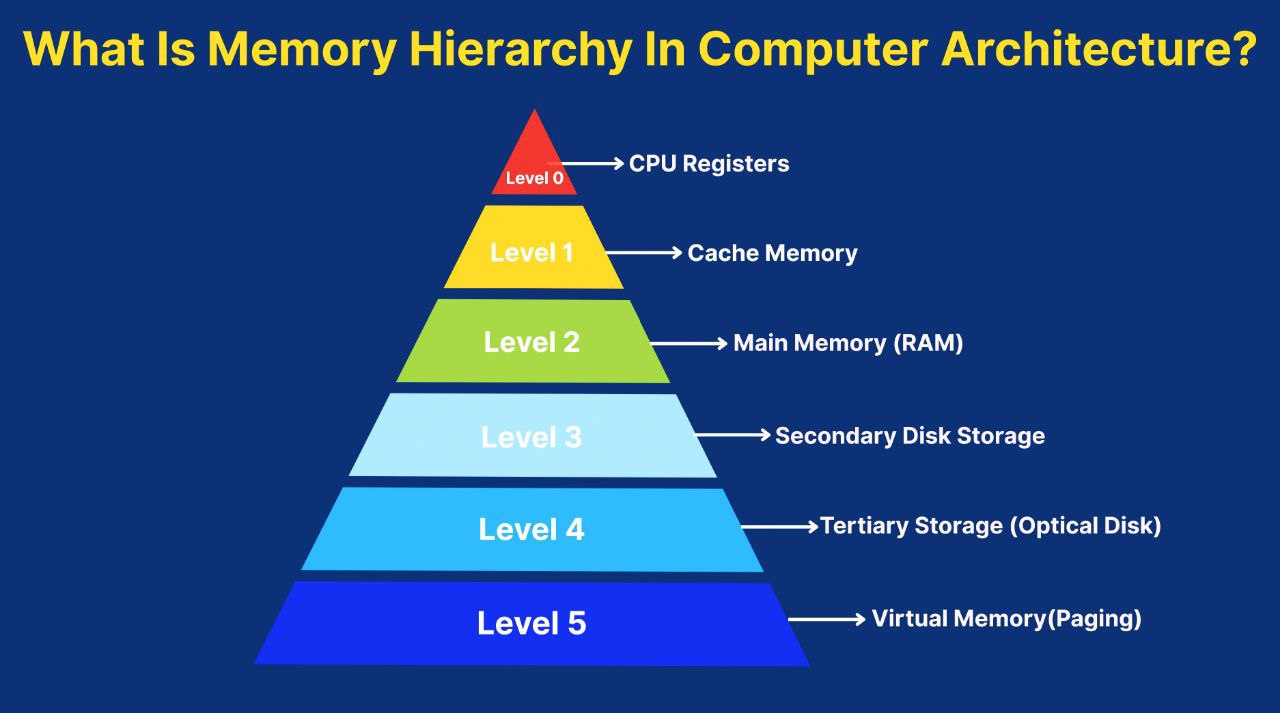

The memory hierarchy is a structured arrangement of different types of computer memory. It is organized in levels, with each level having different speeds, capacities, and costs. The fastest memories (like registers and cache) are closest to the CPU and are smaller in size, whereas the slower memories (like hard drives and cloud storage) are larger and cheaper.

The idea of the memory hierarchy is to give the CPU quick access to the most frequently used data while maintaining a balance between speed, cost, and storage capacity.

2. Meaning and Definition of Memory Hierarchy

The term Memory Hierarchy refers to a system that arranges storage devices in a hierarchical order based on their speed, cost per bit, and capacity.

The principle behind memory hierarchy is that faster storage technologies are more expensive and hence used in smaller amounts. Slower but cheaper technologies are used to provide large storage capacity.

Definition:

“Memory hierarchy is an arrangement of various storage devices in a computer system in such a way that the memory with the fastest access speed and smallest size is placed closest to the CPU, while the memory with the slowest speed and largest size is placed farthest from the CPU.”

3. Need for Memory Hierarchy

The need for a memory hierarchy arises because of the speed gap between the CPU and main memory. CPUs have become extremely fast over the years, but memory speeds have not increased proportionally.

If the CPU has to wait every time it needs data from the main memory or storage device, the system performance will drop drastically. Therefore, a hierarchy of memories is used to keep frequently accessed data in faster storage, while less frequently used data is stored in slower memory.
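To make the cost of waiting concrete, the short sketch below converts a memory latency into the number of clock cycles the CPU spends idle. The 3 GHz clock and the three latencies are illustrative assumptions, not measurements of any particular system, and `stall_cycles` is a hypothetical helper name.

```python
def stall_cycles(latency_ns, clock_ghz):
    """Clock cycles that elapse during one memory access.

    A clock of N GHz completes N cycles per nanosecond.
    """
    return round(latency_ns * clock_ghz)

# Assumed latencies (in ns) for a fast, a medium, and a slow level.
for latency_ns in (1, 10, 100):
    cycles = stall_cycles(latency_ns, clock_ghz=3.0)
    print(f"{latency_ns} ns access -> {cycles} cycles at 3 GHz")
```

Under these assumptions, a single 100 ns access costs as many cycles as hundreds of simple instructions, which is why keeping hot data in a faster level matters.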

Main reasons for memory hierarchy:

- To bridge the gap between processor speed and memory speed.

- To increase the overall performance of the computer.

- To minimize the average access time of data.

- To balance cost and capacity effectively.

- To provide a systematic way of data storage and retrieval.

4. Characteristics of Memory Hierarchy

Each level of the memory hierarchy has distinct characteristics based on three main parameters:

- Speed: Higher levels (like registers and cache) have faster access times.

- Cost per bit: Faster memory is more expensive.

- Capacity: Lower levels (like hard disks) have greater storage capacity.

The key idea is that as we move away from the CPU, the memory becomes slower, cheaper, and larger.

| Level | Type of Memory | Speed | Cost per bit | Capacity | Location |
|---|---|---|---|---|---|
| 1 | Registers | Fastest | Highest | Very Low | Inside CPU |
| 2 | Cache | Very Fast | High | Low | Near CPU |
| 3 | Main Memory (RAM) | Fast | Moderate | Moderate | On motherboard |
| 4 | Secondary Storage (HDD, SSD) | Slow | Low | High | External/Internal |
| 5 | Tertiary Storage (Cloud, Tape) | Slowest | Lowest | Very High | External |

5. Structure of Memory Hierarchy

The memory hierarchy can be visualized as a pyramid.

- The top levels of the pyramid (Registers and Cache) are fast and small.

- The bottom levels (Hard Disk, Cloud Storage) are slow and large.

Diagram of the hierarchy, with the level closest to the CPU at the top:

    +-----------------------+
    |       Registers       |  (Fastest, Smallest)
    +-----------------------+
    |         Cache         |
    +-----------------------+
    |      Main Memory      |
    +-----------------------+
    |   Secondary Storage   |
    +-----------------------+
    |   Tertiary Storage    |  (Slowest, Largest)
    +-----------------------+

Each level acts as a buffer for the next lower level, storing copies of frequently accessed data to improve performance.

6. Levels of Memory Hierarchy

6.1 Registers

Registers are the fastest and smallest type of memory in the computer system. They are located inside the CPU and hold data and instructions that the processor is currently working on.

Characteristics:

- Size: A few bytes to a few kilobytes in total.

- Access time: 0.25 to 1 nanosecond.

- Cost: Very high.

- Managed directly by the CPU.

Types of Registers:

- Instruction Register (IR): Stores the instruction currently being executed.

- Program Counter (PC): Holds the address of the next instruction.

- Accumulator (AC): Stores intermediate results of arithmetic operations.

- General Purpose Registers: Temporarily store data during program execution.

Registers are crucial for CPU efficiency because arithmetic and logical operations work on operands held in registers (exclusively so on load/store architectures).

6.2 Cache Memory

Cache memory is a small, high-speed memory located between the CPU and the main memory (RAM). It stores frequently accessed data and instructions to reduce the average time to access memory.

Purpose:

Cache acts as a buffer between the CPU and the slower main memory.

Characteristics:

- Speed: Faster than RAM but slower than registers.

- Capacity: Typically tens of kilobytes (L1) to tens of megabytes (L3).

- Cost: High, but lower than registers.

- Data stored in cache is temporary and frequently updated.

Types of Cache:

- L1 Cache:

  - Located inside the CPU chip.

  - Fastest and smallest.

  - Access time: ~1 ns.

- L2 Cache:

  - Located near or within the CPU.

  - Larger but slower than L1.

  - Access time: ~2–5 ns.

- L3 Cache:

  - Shared among multiple processor cores.

  - Improves multi-core performance.

Functions of Cache:

- Stores copies of frequently accessed memory locations.

- Reduces CPU idle time.

- Improves data access speed.

6.3 Main Memory (Primary Memory)

Main Memory, commonly known as RAM (Random Access Memory), is the primary storage used by the computer during operation. It stores data, programs, and intermediate results needed for processing.

Characteristics:

- Volatile – data is lost when power is turned off.

- Access time: roughly 50–100 nanoseconds for DRAM.

- Larger capacity than cache.

- Communicates directly with the CPU via buses.

Types of RAM:

- Static RAM (SRAM):

  - Faster, uses flip-flops to store data.

  - Used in cache memory.

- Dynamic RAM (DRAM):

  - Slower, uses capacitors.

  - Cheaper and used for main memory.

Functions:

- Holds the operating system, software, and active processes.

- Supplies instructions to the CPU.

- Temporarily stores data during program execution.

6.4 Secondary Memory

Secondary memory, also known as external or auxiliary storage, provides permanent data storage. It is non-volatile and has a much larger capacity than main memory but slower access times.

Examples:

- Hard Disk Drives (HDDs)

- Solid State Drives (SSDs)

- CDs, DVDs, and Blu-ray discs

- USB Flash Drives

Characteristics:

- Non-volatile – data remains even after power off.

- Access time: milliseconds for HDDs; tens to hundreds of microseconds for SSDs.

- Cost per bit: very low.

- Used for long-term storage of files and programs.

Functions:

- Stores operating system, software, and user files permanently.

- Backs up data for future use.

- Provides data to the CPU when required.

6.5 Tertiary Memory

Tertiary storage refers to devices used for backup, archiving, and storing huge amounts of rarely accessed data.

Examples:

- Magnetic tapes

- Optical disks (used for backup)

- Cloud storage services (Google Drive, Dropbox, etc.)

Characteristics:

- Very large storage capacity.

- Slowest access speed.

- Cost per bit is the lowest.

- Often requires human or robotic handling (in physical storage systems).

Functions:

- Used for long-term storage and backup.

- Stores old data, large databases, and system logs.

- Provides disaster recovery solutions.

7. Working of Memory Hierarchy

The memory hierarchy works on the principle of temporal and spatial locality of reference.

7.1 Temporal Locality

If a particular data item or instruction is accessed once, there is a high chance it will be accessed again soon. Hence, it should be kept in a faster memory such as cache.

7.2 Spatial Locality

If a memory location is accessed, nearby memory locations are likely to be accessed soon. So, the data around that address is fetched along with it, as one block (cache line), and stored in the cache.
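Both kinds of locality can be observed with a toy simulation. The sketch below models a small, fully associative cache with LRU eviction and measures the hit rate of three access patterns. The cache geometry (64 lines of 8 addresses each) and the function name `hit_rate` are assumptions chosen for illustration; real caches differ in size, associativity, and replacement policy.

```python
from collections import OrderedDict

def hit_rate(addresses, num_lines=64, line_size=8):
    """Return the hit rate of a toy fully associative LRU cache.

    Each line covers `line_size` consecutive addresses, so a miss on one
    address also brings its neighbours into the cache.
    """
    cache = OrderedDict()  # line number -> None, least recently used first
    hits = 0
    for addr in addresses:
        line = addr // line_size
        if line in cache:
            hits += 1
            cache.move_to_end(line)        # mark as most recently used
        else:
            cache[line] = None
            if len(cache) > num_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(addresses)

sequential = list(range(4096))            # spatial locality: neighbours in order
strided = [i * 8 for i in range(4096)]    # one access per line, no reuse
hot_loop = [i % 16 for i in range(4096)]  # temporal locality: same 16 addresses

for name, pattern in [("sequential", sequential),
                      ("strided", strided),
                      ("hot loop", hot_loop)]:
    print(f"{name}: {hit_rate(pattern):.4f}")
```

Under these assumptions the sequential scan hits on 7 of every 8 accesses, the strided scan never hits, and the hot loop misses only twice (once for each of the two lines it touches).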

Example:

When the CPU needs data:

- It first checks registers.

- If not found, it looks in cache memory.

- If not in cache, it fetches from main memory.

- If still not found, it goes to secondary storage.

Because most requests are satisfied by the upper levels, the average access time stays close to that of the fastest memory.
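The lookup sequence above can be sketched as a loop over levels ordered from fastest to slowest. This is a simplified model, not how hardware is implemented: the level names, the cost units, and the `lookup` function are hypothetical, the levels are unbounded dictionaries with no eviction, and registers are left out because they are allocated by the compiler rather than searched at run time.

```python
def lookup(address, levels):
    """Search levels fastest-first; return (value, level_name, total_cost).

    `levels` is a list of (name, access_cost, store) tuples, fastest first.
    When the value is found in a slower level, a copy is placed in every
    faster level so that the next access is served sooner.
    """
    cost = 0
    for depth, (name, access_cost, store) in enumerate(levels):
        cost += access_cost
        if address in store:
            value = store[address]
            for _, _, faster_store in levels[:depth]:
                faster_store[address] = value
            return value, name, cost
    raise KeyError(address)

# Assumed costs in arbitrary time units; only the ordering matters here.
levels = [
    ("cache", 1, {}),
    ("main memory", 100, {}),
    ("secondary storage", 100_000, {0x10: "payload"}),
]

first = lookup(0x10, levels)   # served by secondary storage, then copied upward
second = lookup(0x10, levels)  # served by the cache
print(first)
print(second)
```

The first access pays the cost of every level it passes through; the second is served by the cache at a cost of one unit, which is the effect the hierarchy relies on.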

8. Memory Performance and Access Time

The efficiency of a memory hierarchy is measured using Average Memory Access Time (AMAT).

Formula:

$$\text{AMAT} = \text{Hit Time} + (\text{Miss Rate} \times \text{Miss Penalty})$$

Where:

- Hit Time: Time to access data in the faster memory.

- Miss Rate: Fraction of accesses not found in the faster memory.

- Miss Penalty: Time to retrieve data from the slower memory.

The goal is to minimize AMAT by increasing the hit rate and reducing miss penalties.
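A short worked example may help here. The sketch below evaluates the AMAT formula for one cache level and then for two, where the miss penalty of the first level is taken to be the AMAT of the second. All hit times, miss rates, and penalties are assumed values chosen for easy arithmetic, not figures for any real processor.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average Memory Access Time = hit time + miss rate * miss penalty."""
    return hit_time + miss_rate * miss_penalty

# One cache level in front of main memory (assumed: 1 ns hit, 5% misses,
# 100 ns to fetch from main memory).
single_level = amat(hit_time=1.0, miss_rate=0.05, miss_penalty=100.0)

# Two cache levels: an L1 miss is served by L2, so L1's miss penalty is
# the AMAT of L2 (assumed: 5 ns hit, 20% misses, 100 ns main memory).
l2 = amat(hit_time=5.0, miss_rate=0.20, miss_penalty=100.0)
two_level = amat(hit_time=1.0, miss_rate=0.05, miss_penalty=l2)

print(f"single level: {single_level:.2f} ns")
print(f"L2 alone:     {l2:.2f} ns")
print(f"two levels:   {two_level:.2f} ns")
```

With these numbers the single-level AMAT is 1 + 0.05 × 100 = 6 ns, while adding the second level reduces it to 1 + 0.05 × 25 = 2.25 ns, because most L1 misses no longer pay the full main-memory penalty.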

9. Advantages of Memory Hierarchy

- Improved Performance: Reduces CPU waiting time for data.

- Cost Efficiency: Balances expensive fast memory with cheaper large memory.

- High Storage Capacity: Combines multiple memory types for large total capacity.

- Scalability: New levels of memory can be added.

- Data Accessibility: Frequently used data remains available at higher speed.

10. Disadvantages of Memory Hierarchy

- Complex Design: Requires efficient algorithms to manage data flow.

- High Initial Cost: Due to the use of cache and high-speed memory.

- Cache Coherency Issues: In multi-core systems, synchronization can be difficult.

- Energy Consumption: High-speed memories consume more power.

11. Techniques for Memory Management

To ensure smooth data transfer across the hierarchy, several techniques are used:

- Paging and Segmentation: Divide memory into fixed-size pages or variable-size segments for efficient allocation and access.

- Cache Replacement Policies:

  - Least Recently Used (LRU)

  - First In First Out (FIFO)

  - Random Replacement

- Prefetching: Anticipates and loads data before it is requested.

- Virtual Memory: Gives each process its own address space and lets the system use disk space as an extension of RAM.
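To illustrate how two of the replacement policies listed above differ, the sketch below counts misses for LRU and FIFO on the same reference string. The function names, the three-block capacity, and the reference string are assumptions made for this example; real caches apply such policies per set and usually approximate LRU in hardware.

```python
from collections import OrderedDict, deque

def count_misses_lru(refs, capacity):
    """Misses when the least recently used block is evicted."""
    cache = OrderedDict()  # block -> None, least recently used first
    misses = 0
    for block in refs:
        if block in cache:
            cache.move_to_end(block)       # a hit refreshes recency
        else:
            misses += 1
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict least recently used
            cache[block] = None
    return misses

def count_misses_fifo(refs, capacity):
    """Misses when the block that was loaded earliest is evicted."""
    resident = set()
    load_order = deque()                   # oldest loaded block on the left
    misses = 0
    for block in refs:
        if block not in resident:          # hits do not change the order
            misses += 1
            if len(resident) >= capacity:
                resident.discard(load_order.popleft())
            resident.add(block)
            load_order.append(block)
    return misses

refs = [1, 2, 3, 1, 4, 1, 5, 1, 2, 3]     # block 1 is reused often
print("LRU misses: ", count_misses_lru(refs, capacity=3))
print("FIFO misses:", count_misses_fifo(refs, capacity=3))
```

On this reference string LRU incurs 7 misses and FIFO 8: FIFO evicts block 1 simply because it was loaded first, even though it is still in active use. Other reference strings can favour FIFO, so the comparison is illustrative rather than general.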

12. Modern Trends in Memory Hierarchy

- Hybrid Memory Systems: Combine DRAM and Non-Volatile Memory (NVM) for speed and durability.

- 3D Memory Technology: Increases density and reduces access delay.

- Persistent Memory: Bridges the gap between DRAM and storage by retaining data after power loss.

- Cloud-Based Memory Systems: Remote data storage accessible through the internet.

13. Future of Memory Hierarchy

The gap between processor and memory speed is still a challenge. Future developments focus on:

- Integrating memory and processors on the same chip.

- Using AI-driven caching algorithms for predictive data placement.

- Exploring quantum and optical storage for ultra-fast data access.

14. Conclusion

The memory hierarchy is a fundamental concept in computer organisation that enhances the performance, efficiency, and cost-effectiveness of computing systems. It bridges the speed gap between the CPU and memory by using multiple storage levels, each optimized for a different purpose.

By placing frequently used data in faster memory and less-used data in slower memory, computers can operate at near-optimal speeds without significantly increasing costs. Understanding memory hierarchy is essential for anyone studying computer science or information technology, as it forms the backbone of modern computing architectures.

In conclusion, the success of a computer system lies in how efficiently its memory hierarchy is designed and managed, ensuring that data flows seamlessly between the CPU and storage.